Human computer interaction advancement by usage of smart phones for motion tracking and remote operation

Date

January, 2015

Conference

IEEE Scalcom 2014, Bali

Paper download rank

Download from IEEE Xplore | Download Conference Presentation

Authors: Solely authored

Abstract: With the advent of faster home computers, growth and development of smart phones combined with advancements in computer vision, recent years have seen various research, homebrew and commercial projects aimed at enhancing human computer interaction by novel means. These methods involve both live video processing and transport of user operation to devices external to the computer. Various methodologies of object recognition, tracking and processing have been used in wide practical applications like traffic analysis, pedestrian tracking, gesture processing and mouse emulation. This paper presents a comprehensive and integrated method to emulate movement and standard features of a computer mouse in various scenarios of different lighting conditions and means of operation using OpenCV, a computer vision library; cvBlob, a blob analysis library; and B4A, a BASIC compiler and IDE for Android. The paper also compares operating success efficiencies in different operating conditions. A standard computer webcam is used to observe and track the user’s smartphone screen for movement and preprogrammed events signaling clicking, dragging or other operations. Unlike contemporary human computer interaction systems, the key advantage of this system is the non-requirement of any kind of network that connects the handheld device to the computer to achieve remote operation.

Languages

Tools

This project grew from an idea I once worked on during my 3rd year in college. The idea was to emulate touch-free mouse movement by finding the position of a laser pointer on an LCD screen and then emulating a click at that spot on the computer. A simple webcam and a simple pointing device were all that was needed. There were problems, though, like capturing a picture of the screen at the exact moment a flash flooded it. The approach also turned out to be too heavily dependent on lighting conditions, and I eventually dropped it as not viable.

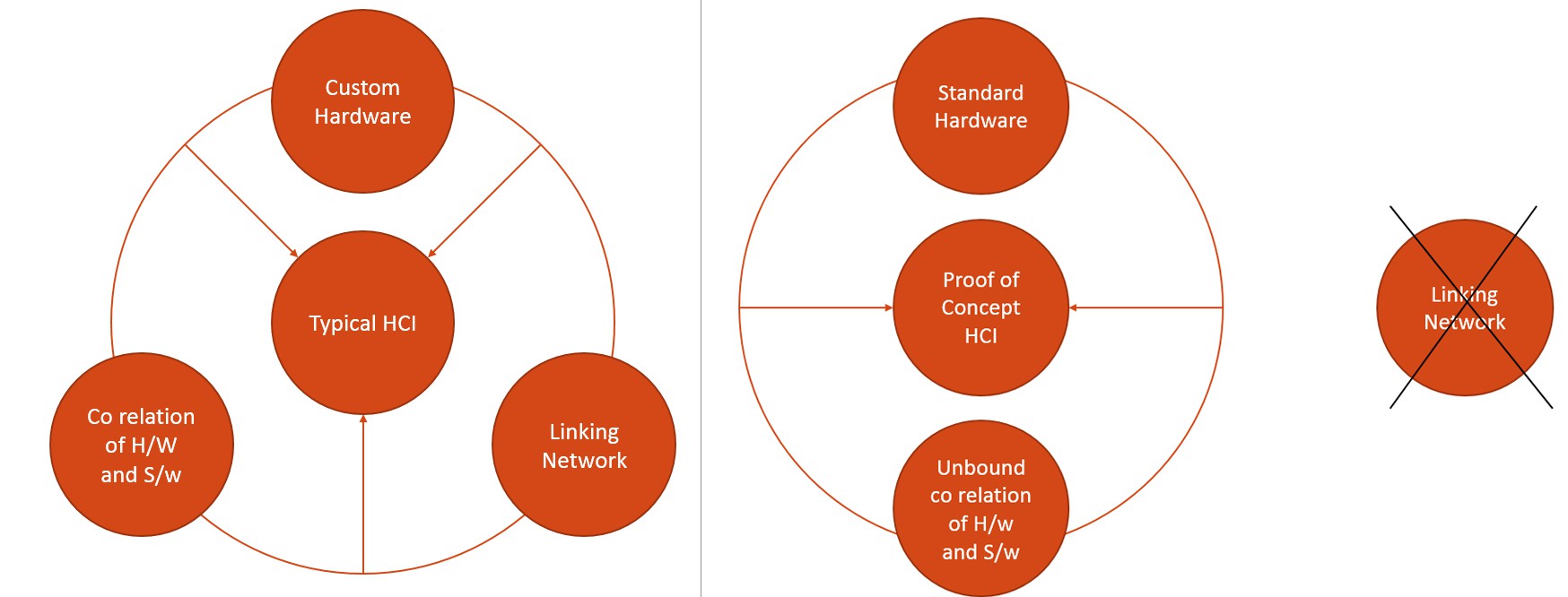

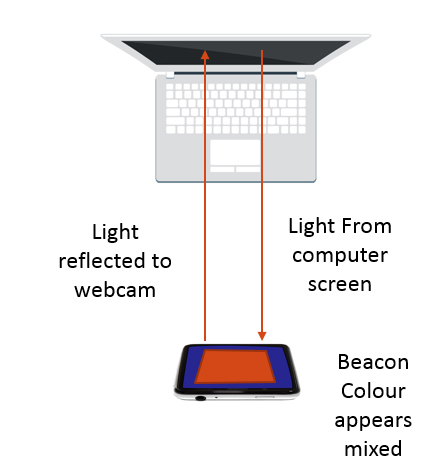

Around the middle of 2014, I began to think about the project again. I realized that its fundamental working idea could be used elsewhere. After some thinking, I came up with the idea of using the phone screen as a beacon that can be tracked to emulate movement on the computer screen. My motive was to develop a proof-of-concept system with the following advantages:

In case you didn’t get how simple this is, this picture should help you out:

![]()

I theorized that the system wouldn’t depend much on lighting conditions, since the target object is a close-range light source. I began experimenting with this method by first trying to isolate and track the brightest point on the screen, using cvBlob for blob tracking. Here’s a picture of my first successful bright blob identification and tracking.

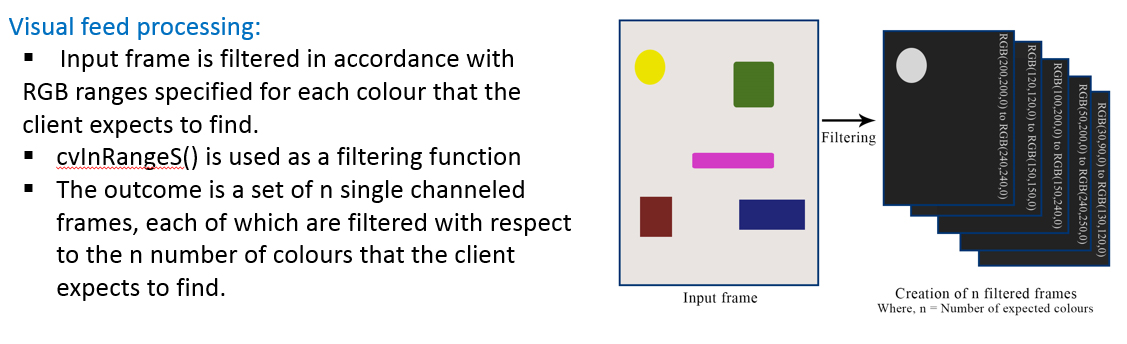
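To make the "track the brightest point" idea concrete, here is a minimal sketch in plain Python. It is not the project's code: the real system used OpenCV and cvBlob, which label connected blobs, whereas this simplification treats every pixel above a brightness threshold as one blob and returns its centroid. The function names, the threshold of 200 and the linear camera-to-screen scaling are all assumptions made for illustration.

```python
from typing import List, Optional, Tuple

Frame = List[List[int]]  # grayscale frame: rows of 0-255 brightness values


def brightest_blob_centroid(frame: Frame,
                            threshold: int = 200) -> Optional[Tuple[float, float]]:
    """Return the (x, y) centroid of all pixels >= threshold, or None if there are none.

    Assumption: one bright blob per frame (the phone screen). A real blob
    library would separate connected regions instead of pooling them.
    """
    sum_x = sum_y = count = 0
    for y, row in enumerate(frame):
        for x, value in enumerate(row):
            if value >= threshold:
                sum_x += x
                sum_y += y
                count += 1
    if count == 0:
        return None
    return (sum_x / count, sum_y / count)


def to_screen(point: Tuple[float, float],
              frame_size: Tuple[int, int],
              screen_size: Tuple[int, int]) -> Tuple[int, int]:
    """Scale a camera-frame point to screen pixels (hypothetical linear mapping)."""
    (px, py), (fw, fh), (sw, sh) = point, frame_size, screen_size
    return (int(px * sw / fw), int(py * sh / fh))


if __name__ == "__main__":
    # A 4x4 dark frame with a bright 2x2 patch in the middle.
    frame = [[0] * 4 for _ in range(4)]
    for y in (1, 2):
        for x in (1, 2):
            frame[y][x] = 255
    centroid = brightest_blob_centroid(frame)
    print(centroid)
    if centroid is not None:
        print(to_screen(centroid, (4, 4), (1920, 1080)))
```

Calling this once per webcam frame and feeding the scaled point to the operating system's cursor API would give basic pointer movement. How the cursor is actually driven is outside the scope of this sketch.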

Motion tracking was smooth, which showed that the method was efficient enough: the machine I was testing on was only a standard machine at best. After a few revisions, I realized that I could pick up events as well. All I had to do was multiplex the colour identification process to handle several colours at the same time, with each colour corresponding to a different function. This could be done by separately channeling the colours:

*cvInRangeS() is an OpenCV function from the library’s C API (it is not part of cvBlob). It lets us specify a range of colour values to look for; note that OpenCV stores colour channels in BGR order by default. Understandably, the greater the difference between target colours, the “safer” the operation becomes; that is, the less likely the program is to confuse two colours.
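The colour multiplexing can be sketched in plain Python as one range test per colour, with each colour tied to an event. This only imitates the kind of per-pixel range check that an in-range function performs. It is not the project's code, and the colour ranges, event names, inclusive bounds and the `min_pixels` cut-off below are hypothetical values chosen for illustration.

```python
from typing import Dict, List, Optional, Tuple

Pixel = Tuple[int, int, int]        # (R, G, B), each 0-255
ColourRange = Tuple[Pixel, Pixel]   # inclusive lower and upper bounds (sketch's choice)
ColourFrame = List[List[Pixel]]

# Hypothetical colour-to-event table; widely separated colours are less
# likely to be confused with each other.
EVENT_RANGES: Dict[str, ColourRange] = {
    "move": ((200, 200, 200), (255, 255, 255)),    # near-white screen
    "left_click": ((200, 0, 0), (255, 80, 80)),    # red screen
    "drag": ((0, 0, 200), (80, 80, 255)),          # blue screen
}


def in_range(pixel: Pixel, lower: Pixel, upper: Pixel) -> bool:
    """True if every channel of the pixel lies within its bounds."""
    return all(lo <= c <= hi for c, lo, hi in zip(pixel, lower, upper))


def mask_for(frame: ColourFrame, colour_range: ColourRange) -> List[List[bool]]:
    """Build a binary mask for one colour range (one 'channel' of the multiplexer)."""
    lower, upper = colour_range
    return [[in_range(p, lower, upper) for p in row] for row in frame]


def detect_event(frame: ColourFrame, min_pixels: int = 4) -> Optional[str]:
    """Return the event whose mask covers the most pixels, or None if none qualifies."""
    best_event, best_count = None, 0
    for event, colour_range in EVENT_RANGES.items():
        count = sum(cell for row in mask_for(frame, colour_range) for cell in row)
        if count >= min_pixels and count > best_count:
            best_event, best_count = event, count
    return best_event


if __name__ == "__main__":
    red_frame = [[(230, 20, 20)] * 3 for _ in range(3)]
    print(detect_event(red_frame))
```

Each extra colour adds one more full pass over the frame, so processing cost grows with the number of colours tracked.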


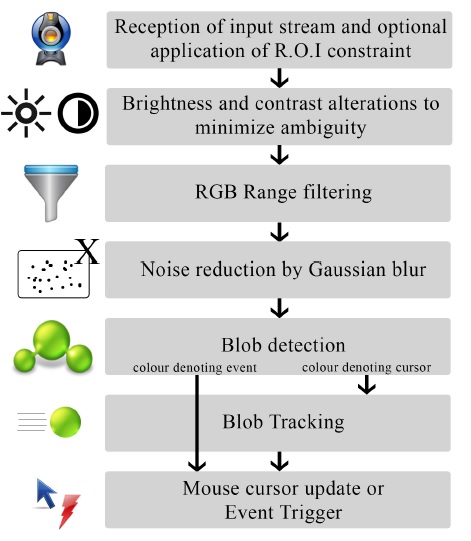

I’ve skipped a few steps that are common to most computer vision software: brightness and contrast control, setting up an R.O.I. (region of interest, in case you need to exclude certain zones from the webcam’s view) and noise reduction. Anyway, the whole process is given here:
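As a rough illustration of those common preprocessing steps only (not the author's actual pipeline), the following plain-Python sketch crops a region of interest, applies a linear contrast and brightness adjustment, and smooths noise with a 3x3 mean filter. All function names and parameter values are assumptions. A real implementation would use the equivalent OpenCV routines on camera frames.

```python
from typing import List

Frame = List[List[int]]  # grayscale frame: rows of 0-255 values


def crop_roi(frame: Frame, x: int, y: int, width: int, height: int) -> Frame:
    """Keep only the rectangle starting at (x, y) with the given size."""
    return [row[x:x + width] for row in frame[y:y + height]]


def adjust(frame: Frame, contrast: float = 1.0, brightness: int = 0) -> Frame:
    """Apply value * contrast + brightness, clamped to the 0-255 range."""
    return [[max(0, min(255, round(contrast * v + brightness))) for v in row]
            for row in frame]


def box_blur(frame: Frame) -> Frame:
    """3x3 mean filter; edge pixels average over the neighbours that exist."""
    h, w = len(frame), len(frame[0])
    out = []
    for y in range(h):
        out_row = []
        for x in range(w):
            neighbours = [frame[ny][nx]
                          for ny in range(max(0, y - 1), min(h, y + 2))
                          for nx in range(max(0, x - 1), min(w, x + 2))]
            out_row.append(sum(neighbours) // len(neighbours))
        out.append(out_row)
    return out


def preprocess(frame: Frame) -> Frame:
    """Example chain: crop a hypothetical ROI, boost contrast, then denoise."""
    roi = crop_roi(frame, 0, 0, len(frame[0]), len(frame))  # full frame as ROI here
    return box_blur(adjust(roi, contrast=1.2, brightness=0))


if __name__ == "__main__":
    noisy = [[0, 0, 0], [0, 90, 0], [0, 0, 0]]
    print(box_blur(noisy))
```

The order matters somewhat: cropping first means the later steps touch fewer pixels, which helps on modest hardware.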

Results

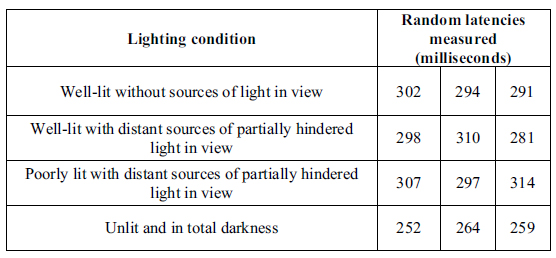

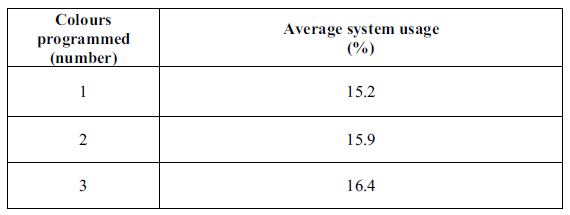

The whole setup worked pretty well in both well-lit and poorly lit conditions. Here are the latencies for a few test runs, along with the system stress as colours were added.

Issues

I came across a problem which seemed completely counterintuitive to the whole idea of tracking bright objects. The efficiency of tracking, and even its correctness, fell in completely unlit conditions. A little investigation showed the reason behind this:

I’ve obviously been very brief in this explanation. Do read the paper for full information on the whole system.